AI customer data protection is crucial as businesses increasingly rely on artificial intelligence to enhance customer experiences. Safeguarding sensitive information in AI-driven systems goes beyond traditional security measures—it requires careful management of how data is collected, stored, and used. Understanding the unique risks of AI technologies helps companies implement strategies that minimize exposure to breaches and misuse. From encryption and access controls to ensuring transparency and compliance with regulations like GDPR and CCPA, this guide walks you through key best practices. Whether you’re evaluating data privacy tools or balancing AI performance with ethical concerns, protecting customer data is vital for maintaining trust and meeting legal obligations. This article outlines practical steps to strengthen your AI systems’ data protection, helping you build a privacy-conscious approach while leveraging AI’s potential.

Understanding AI Customer Data Protection

Defining Customer Data in AI Contexts

Customer data in AI contexts encompasses any information collected, processed, or generated through interactions between customers and AI-driven systems. This includes personal identifiers like names, addresses, and contact details, as well as behavioral data such as purchase history, preferences, and engagement patterns. In AI systems, data may also extend to inferred insights, predictive analytics, and biometric information used to enhance personalization or decision-making. Because AI often aggregates and analyzes large datasets from diverse sources, defining customer data requires recognizing both explicit information provided directly by users and implicit data derived from AI’s processing activities. Understanding precisely what constitutes customer data is crucial for establishing appropriate protections and ensuring responsible AI use.

Importance of Data Protection in AI-driven Customer Service

Data protection plays a pivotal role in AI-driven customer service as these systems regularly handle sensitive and personal information to deliver tailored experiences and efficient support. Without robust safeguards, customer data can be vulnerable to unauthorized access, misuse, or accidental exposure, which can harm individuals and erode trust. Proper data protection not only complies with legal requirements but also enhances customer confidence by demonstrating a commitment to privacy and security. Given AI’s ability to automate and scale interactions, any breach or misuse of data risks widespread impact. Therefore, protecting customer information supports both ethical AI deployment and sustained business success by balancing innovation with respect for privacy.

Key Risks and Threats to Customer Data in AI Systems

AI systems face several risks when handling customer data, ranging from technical vulnerabilities to ethical challenges. Common threats include data breaches caused by hacking or system flaws, exposing sensitive customer information. AI’s reliance on large datasets also raises risks of inadvertent data leakage or improper sharing across platforms. Moreover, biases in training data can lead to discriminatory outcomes, compromising fairness and user trust. Privacy risks emerge if AI collects or processes data beyond what customers have consented to, violating regulatory requirements. Additionally, complex AI models may lack transparency, making it difficult to detect misuse or errors. Recognizing these risks helps organizations implement targeted strategies to safeguard customer data throughout the AI lifecycle.

Core Principles and Best Practices for Protecting Customer Data in AI

Data Minimization and Purpose Limitation

Data minimization and purpose limitation are foundational to protecting customer data in AI systems. Collecting only the data necessary for a specific function limits the exposure of sensitive information and reduces the potential impact of breaches. Purpose limitation ensures that data is used strictly for the reasons customers have been informed about and have consented to. For AI-driven customer service, this means designing models and processes to avoid excessive data gathering, focusing instead on relevant and directly related information. Implementing clear data retention schedules tied to specific purposes can further enforce these principles, ensuring data is not kept longer than needed. By adhering to minimization and purpose limitation, organizations reduce complexity in data management and demonstrate respect for customer privacy from the outset.
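
As a rough illustration of how minimization and purpose limitation can be enforced in code, the sketch below filters a customer record down to an allow-list of fields for a declared purpose. The purpose names and field names are hypothetical and would come from your own data map.

```python
from typing import Any

# Hypothetical mapping from an approved processing purpose to the
# fields that purpose actually needs. Anything else is dropped.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "support_reply": frozenset({"ticket_id", "message", "language"}),
    "order_lookup": frozenset({"ticket_id", "order_id"}),
}


def minimize(record: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Return only the fields permitted for the stated purpose."""
    if purpose not in ALLOWED_FIELDS:
        # Purpose limitation: refuse processing for undeclared purposes.
        raise ValueError(f"No approved purpose named {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}
```

Calling `minimize(record, "support_reply")` before passing a record to an AI component means fields such as an email address never reach the model unless a declared purpose requires them.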

Implementing Strong Data Encryption and Anonymization

Protecting customer data in AI systems requires robust encryption and anonymization strategies. Encrypting data both at rest and in transit safeguards it from unauthorized access and interception. This is especially critical when data moves between AI components, cloud services, or external vendors. De-identification techniques, such as data masking or tokenization, make it harder to identify individuals during AI training and analysis. True anonymization means the data can no longer be linked back to a person. When that isn’t feasible, pseudonymization can reduce risk by replacing identifying fields with artificial identifiers, although anyone holding the mapping or key can re-link the data, and pseudonymized data is still treated as personal data under laws such as GDPR. Together, encryption and anonymization or pseudonymization form a vital defense layer, so that even if data exposure occurs, sensitive details are far harder for attackers to read or use.
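
Pseudonymization can be sketched with a keyed hash from the Python standard library. This is a minimal example, assuming the secret key is stored separately from the data (for instance in a secrets manager); the function and field names are illustrative, and it does not cover encryption at rest or in transit.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a deterministic keyed token (HMAC-SHA256).

    The same input and key always give the same token, so records can
    still be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, id_fields: set, secret_key: bytes) -> dict:
    """Pseudonymize only the identifying fields; leave other fields as-is."""
    return {
        key: pseudonymize(str(value), secret_key) if key in id_fields else value
        for key, value in record.items()
    }
```

Because the tokens are deterministic, anyone with the key can recompute them for a known identifier, so the output should still be handled as personal data.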

Access Controls and Authentication Mechanisms

Restricting access to customer data within AI environments minimizes potential misuse and breaches. Implementing role-based access control (RBAC) ensures that only authorized personnel can interact with sensitive information. Authentication mechanisms like multi-factor authentication (MFA) add an extra layer of security by requiring multiple forms of verification before granting access. Limiting administrative privileges and regularly reviewing access rights further tighten control measures. For AI systems, where data may flow across numerous components, maintaining strict access policies helps protect data integrity and confidentiality. Automated access monitoring can identify unusual activities early, enabling swift response to potential threats.
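
A role-based check can be very small. The sketch below assumes a hypothetical set of roles and permissions and denies anything not explicitly granted; a real deployment would usually rely on the identity provider or platform's own access-control features.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"read_ticket"}),
    "ml_engineer": frozenset({"read_pseudonymized_data"}),
    "privacy_officer": frozenset(
        {"read_ticket", "read_pseudonymized_data", "export_customer_data"}
    ),
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions get no access."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require(role: str, permission: str) -> None:
    """Raise PermissionError unless the role holds the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"{role!r} may not {permission!r}")
```

Keeping the mapping in one place also makes the periodic access reviews mentioned above easier, since the full set of grants can be read at a glance.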

Regular Auditing and Monitoring of AI Data Usage

Continuous auditing and monitoring of AI data usage play a critical role in identifying anomalies and maintaining compliance with data protection policies. Regular audits assess whether data collection, processing, and storage align with stated purposes and legal requirements. Monitoring tools can track data access patterns and flag unusual or unauthorized operations, providing early warnings of breaches or misuse. Logs generated from these activities support accountability and transparency, as well as forensic investigations if incidents occur. Establishing a routine schedule for these assessments ensures that AI systems remain compliant and that data protection measures evolve alongside emerging threats.
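
As one simple illustration of access-pattern monitoring, the sketch below scans access log events and flags users who touched more distinct customer records than a chosen limit. The event shape and the threshold are assumptions; production monitoring would typically use time windows and per-role baselines.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AccessEvent:
    """One entry from a (hypothetical) data access log."""
    user: str
    record_id: str


def flag_heavy_readers(events: Iterable[AccessEvent], max_distinct_records: int) -> list[str]:
    """Return users who accessed more distinct records than the limit."""
    seen: dict[str, set[str]] = {}
    for event in events:
        seen.setdefault(event.user, set()).add(event.record_id)
    return sorted(
        user for user, records in seen.items() if len(records) > max_distinct_records
    )
```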

Ensuring Transparency and Customer Consent

Transparency about how customer data is collected, stored, and used by AI systems strengthens trust and supports compliance with privacy laws. Customers should receive clear, concise information on data practices before consenting, including any AI involvement in decision-making. Obtaining explicit consent for specific uses and offering easy options to withdraw consent are crucial elements. Transparent privacy policies and user-friendly communication tools help customers understand their rights and the value of sharing data. Moreover, organizations should document all consent-related interactions to demonstrate compliance. By prioritizing openness, companies foster positive customer relationships and encourage responsible AI data practices.
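
Documenting consent and honoring withdrawals can be sketched as an append-only ledger in which the most recent entry for a customer and purpose decides the outcome. The class and field names below are hypothetical, and the storage is in memory only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentEvent:
    customer_id: str
    purpose: str
    granted: bool
    recorded_at: datetime


@dataclass
class ConsentLedger:
    """Append-only record of consent grants and withdrawals."""
    events: list[ConsentEvent] = field(default_factory=list)

    def record(self, customer_id: str, purpose: str, granted: bool) -> None:
        # Entries are never edited or deleted, which preserves the audit trail.
        self.events.append(
            ConsentEvent(customer_id, purpose, granted, datetime.now(timezone.utc))
        )

    def has_consent(self, customer_id: str, purpose: str) -> bool:
        """The latest entry for this customer and purpose wins; default is no."""
        for event in reversed(self.events):
            if event.customer_id == customer_id and event.purpose == purpose:
                return event.granted
        return False
```

Checking `has_consent` per purpose, rather than keeping a single yes/no flag per customer, keeps consent tied to the specific use the customer agreed to.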

Legal and Regulatory Compliance in AI Data Privacy

Overview of Relevant Data Protection Regulations (e.g., GDPR, CCPA)

Navigating data protection regulations is a fundamental aspect of AI customer data protection. The General Data Protection Regulation (GDPR) in the European Union sets a global benchmark with its comprehensive requirements for data processing, including principles such as lawfulness, fairness, and transparency. GDPR requires a lawful basis for processing (consent is one of several), sets strict conditions for consent where it is relied on, grants individuals the right to access and erase their data, and requires notifying the supervisory authority of a personal data breach without undue delay, where feasible within 72 hours of becoming aware of it. Similarly, the California Consumer Privacy Act (CCPA) enhances consumer rights in the U.S., emphasizing transparency and control over personal data for California residents. Other regional laws like Brazil’s LGPD and Canada’s PIPEDA also impose robust standards. Companies using AI must understand these regulations’ geographic scopes and specific provisions, especially concerning automated decision-making and profiling, to ensure compliance and protect customer data effectively.

Compliance Challenges Specific to AI Customer Data

Applying data regulations to AI-driven systems presents unique challenges. AI models often require large volumes of personal data for training, which can conflict with principles like data minimization and purpose limitation. Moreover, AI systems can generate insights or make decisions that are difficult to interpret, complicating transparency and accountability obligations under many data protection laws. Ensuring informed customer consent becomes complex when AI processes data in dynamic or opaque ways. Additionally, keeping track of data lineage and ensuring the right to correction or deletion can be complicated due to the nature of AI models. Organizations must address these challenges by incorporating privacy by design, documenting AI decision processes, and continuously monitoring data flows within AI workflows to maintain regulatory compliance.

Aligning AI Practices with Industry Standards and Frameworks

Beyond legal mandates, adherence to industry standards and privacy frameworks reinforces AI data protection efforts. Frameworks such as ISO/IEC 27001 for information security management and ISO/IEC 27701 for privacy information management provide structured approaches to securing personal data in AI implementations. The NIST Privacy Framework offers voluntary guidance for building privacy risk management programs, which can be applied to emerging technologies like AI. Companies can also look to principles established by organizations like the IEEE and partnerships such as the Partnership on AI, which promote ethical AI development encompassing fairness, transparency, and user control. Aligning AI practices with these frameworks encourages accountability and fosters trust, ensuring AI systems not only comply with regulations but also meet evolving expectations for responsible data use.

Incorporating AI-specific Privacy Considerations

Understanding the Unique Privacy Risks of AI Technologies

AI technologies introduce distinct privacy challenges that differ significantly from traditional systems. One primary concern is the complex nature of AI algorithms, which often operate as “black boxes,” making it difficult to trace how customer data is processed and decisions are made. This opacity can obscure data misuse or inadvertent leaks. Additionally, AI models frequently require large datasets for training, increasing the risk of exposure from extensive data aggregation. The use of sensitive personal information, such as biometric data or behavioral patterns, heightens the potential impact of privacy breaches. Furthermore, AI’s ability to infer sensitive information not explicitly provided by users may lead to privacy violations. Understanding these risks requires a nuanced approach that recognizes both the technical and ethical dimensions of AI data handling.

AI’s Use of Personal Data: Purpose and Limitation

Clear delineation of purpose and limitation is critical when using personal data within AI systems. Organizations must define and document exactly why customer data is being collected and how it will be used, aligning with privacy principles like purpose limitation to ensure data is not repurposed without consent. This means abstaining from secondary uses that customers have not agreed to, thereby reducing unnecessary exposure. Purpose-driven data collection also supports compliance with regulations such as GDPR, which mandate transparency and lawful processing grounds. Limiting data use encourages more responsible AI development and deployment, preserving privacy while enabling AI to deliver personalized services. Establishing these boundaries helps build trust and ensures AI applications align with ethical standards and user expectations.

Managing Data Collection, Storage, and Reporting

Effective management of how data is collected, stored, and reported is vital for safeguarding customer privacy in AI environments. This includes implementing rigorous data governance practices to track data provenance, consent status, and permission scopes throughout the AI lifecycle. Proper reporting mechanisms must offer transparency to regulators and customers alike, detailing what data is held, its source, and usage patterns. Data retention policies are equally important to prevent unnecessary storage, reducing attack surfaces and complying with privacy norms. Automated audit logs and reporting tools enhance accountability by flagging any unauthorized access or anomalies quickly. By maintaining clear and verifiable records of data handling activities, organizations can demonstrate compliance, respond promptly to inquiries, and continuously improve their privacy posture.
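
A retention policy can be expressed as a small lookup of how long each data category may be kept. The sketch below is illustrative only: the categories and durations are hypothetical placeholders, not recommended or legally required values.

```python
from datetime import datetime, timedelta

# Hypothetical retention periods per data category (placeholders only).
RETENTION_PERIODS: dict[str, timedelta] = {
    "chat_transcript": timedelta(days=90),
    "support_ticket": timedelta(days=365),
}


def is_expired(category: str, collected_at: datetime, now: datetime) -> bool:
    """True when a record has been held longer than its category allows.

    An unknown category raises KeyError, so data without a defined
    retention period is surfaced rather than silently kept.
    """
    return now - collected_at > RETENTION_PERIODS[category]


def records_to_delete(records: list[dict], now: datetime) -> list[str]:
    """Return the ids of records that are past their retention period."""
    return [
        record["id"]
        for record in records
        if is_expired(record["category"], record["collected_at"], now)
    ]
```

Running a job like this on a schedule, and logging what it deletes, gives a verifiable record that retention limits are being applied.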

Evaluating Technologies and Tools for AI Customer Data Protection

Data Privacy Enhancing Technologies (PETs)

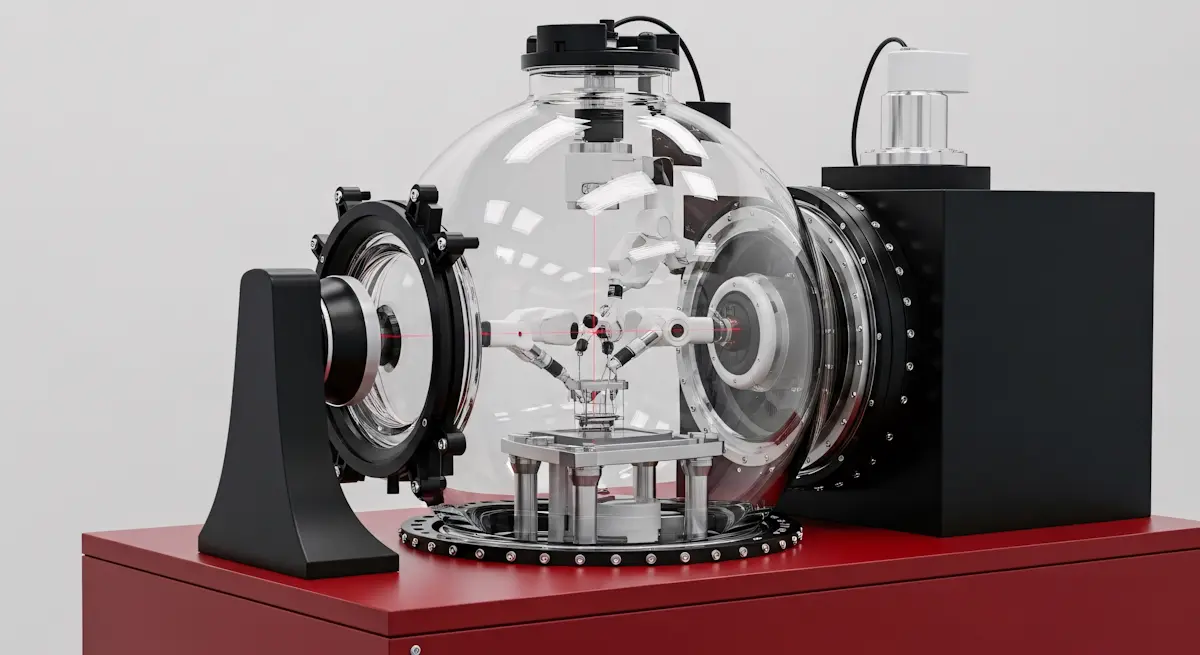

Data Privacy Enhancing Technologies (PETs) are essential tools designed to safeguard personal data throughout AI processing while allowing effective analytics and machine learning. Techniques such as differential privacy, homomorphic encryption, and secure multi-party computation enable organizations to analyze datasets and train AI models without exposing identifiable customer information. For example, differential privacy adds carefully calibrated noise to query results or model updates, making it difficult to infer whether any individual’s data was included while preserving overall data trends. Homomorphic encryption allows computations on encrypted data, ensuring that sensitive information remains confidential even during processing. Incorporating PETs helps businesses comply with data protection laws by minimizing risks related to data leakage and unauthorized access. PETs provide a robust foundation for balancing the analytical power of AI with stringent privacy requirements, making them a cornerstone of modern customer data protection strategies.
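
One common form of differential privacy, the Laplace mechanism, can be sketched for a simple counting query. A count changes by at most 1 when one person is added or removed, so noise drawn from a Laplace distribution with scale 1/epsilon is added to the true count. This is a teaching sketch using only the standard library; it is not hardened against floating-point attacks and is not a substitute for a vetted differential privacy library.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    values: Iterable,
    predicate: Callable[[object], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return a noisy count of items matching the predicate.

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    # Sensitivity of a counting query is 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Each released answer consumes part of a privacy budget, so repeated queries over the same data need their epsilon values accounted for together.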

AI Transparency and Explainability Tools

Transparency and explainability tools address a critical aspect of AI customer data protection by shedding light on how AI models use data and make decisions. These tools generate interpretable explanations of AI behavior, helping organizations verify that data usage aligns with privacy policies and regulatory mandates. For instance, techniques like feature importance analysis, model-agnostic explanation frameworks (e.g., LIME, SHAP), and visualization methods allow stakeholders to understand AI decisions and detect potential biases or privacy concerns. By making AI systems more interpretable, these tools foster trust among customers and regulators. They also support audits and impact assessments necessary for compliance, providing clarity around the handling of sensitive customer data. Explainability ensures that AI-driven customer interactions remain transparent, accountable, and respectful of individual privacy rights.
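
Feature importance analysis can be illustrated with permutation importance, a model-agnostic technique distinct from LIME and SHAP: shuffle one feature at a time and measure how much accuracy drops. The sketch below treats the model as any callable that maps a row to a label; the names are illustrative.

```python
import random
from typing import Callable, Sequence


def accuracy(model: Callable, rows: Sequence[Sequence], labels: Sequence) -> float:
    """Fraction of rows for which the model predicts the given label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(
    model: Callable,
    rows: Sequence[Sequence],
    labels: Sequence,
    n_features: int,
    rng: random.Random,
) -> list[float]:
    """Return the accuracy drop caused by shuffling each feature column."""
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled_rows = [
            list(row[:j]) + [column[i]] + list(row[j + 1:])
            for i, row in enumerate(rows)
        ]
        drops.append(baseline - accuracy(model, shuffled_rows, labels))
    return drops
```

A large drop for a sensitive attribute, or for a close proxy of one, is a signal to review whether the model should be using that field at all.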

Security Solutions Tailored for AI Systems

AI systems require specialized security solutions to protect customer data at every stage—from data ingestion to model deployment. These solutions include advanced encryption protocols for data at rest and in transit, secure data storage architectures, and access controls fine-tuned for AI environments. Additionally, AI-specific threat detection tools monitor for anomalies indicating data tampering, model theft, or adversarial attacks aimed at compromising data integrity or confidentiality. Techniques like federated learning, which distribute model training without exposing raw data, also enhance security by reducing centralized data risks. Tailored security frameworks integrate continuous vulnerability assessments and patch management to address the evolving threat landscape affecting AI infrastructures. Deploying robust security measures that consider AI’s unique characteristics is crucial for maintaining the confidentiality and integrity of customer data in AI-driven applications.
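
The idea behind federated learning can be sketched with a toy version of federated averaging: each client fits a tiny linear model on its own data, and only the resulting weights, never the raw records, are sent to a server that averages them. The model, learning rate, and round counts are assumptions chosen to keep the example small.

```python
Weights = tuple[float, float]  # (slope, intercept) of y = w * x + b


def local_update(weights: Weights, data: list[tuple[float, float]],
                 lr: float = 0.3, epochs: int = 20) -> Weights:
    """Run gradient descent on mean squared error using one client's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates: list[Weights], sizes: list[int]) -> Weights:
    """Average client weights, weighted by each client's number of samples."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train(clients: list[list[tuple[float, float]]], rounds: int = 50) -> Weights:
    """Server loop: broadcast weights, collect local updates, average them."""
    weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in clients]
        weights = federated_average(updates, [len(data) for data in clients])
    return weights
```

Model updates can still leak information about the underlying data, which is why federated learning is often combined with techniques such as differential privacy or secure aggregation.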

Overcoming Challenges in Protecting Customer Data with AI

Addressing Data Bias and Ethical Concerns

Data bias poses a significant challenge in AI-driven customer service, as skewed or unrepresentative datasets can lead to unfair outcomes and discrimination. Mitigating bias requires careful dataset selection, ensuring diversity and inclusivity in the data used to train AI models. Ethical concerns also extend to the potential misuse of data or AI decisions that adversely affect customer groups. Organizations should establish clear ethical guidelines and accountability measures for AI systems, including regular bias audits and third-party evaluations. Transparency about how AI models make decisions helps build trust and provides customers with avenues to address concerns. By fostering an ethical framework, companies can avoid perpetuating harmful biases and maintain compliance with evolving societal expectations and regulations.

Managing Data Quality and Integrity

Maintaining high-quality, accurate data is essential for protecting customer information and ensuring AI systems operate reliably. Poor data quality can lead to inappropriate AI outputs, increasing the risk of privacy breaches or erroneous decisions affecting customers. Implementing robust data validation techniques at every stage—from collection to processing—is critical. Data integrity checks prevent unauthorized alterations and ensure consistency across AI workflows. Organizations should also establish systematic processes for detecting anomalies and rectifying errors quickly. Employing data governance frameworks that establish clear ownership and maintain audit trails supports these efforts. Prioritizing data quality helps AI systems deliver trustworthy results while safeguarding sensitive customer data from exposure due to errors or inconsistencies.
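
Validation and integrity checks can be sketched as two small functions: one that reports missing or mistyped fields, and one that computes a fingerprint of a record so later changes can be detected. The required fields are hypothetical. A plain hash detects accidental changes; detecting deliberate tampering also requires protecting the stored fingerprint or using a keyed hash.

```python
import hashlib
import json

# Hypothetical schema: required field name -> expected Python type.
REQUIRED_FIELDS: dict[str, type] = {"ticket_id": str, "message": str}


def validate(record: dict) -> list[str]:
    """Return a list of problems found; an empty list means the record passed."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for {name}")
    return problems


def fingerprint(record: dict) -> str:
    """SHA-256 over a canonical JSON form, so key order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Storing the fingerprint when a record enters the pipeline and comparing it before training or inference gives a cheap consistency check across AI workflows.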

Balancing AI Performance with Privacy Requirements

Striking the right balance between AI functionality and stringent privacy safeguards can be difficult. Enhanced privacy measures such as data minimization, encryption, or anonymization may limit the volume or granularity of data accessible, potentially impacting AI performance and insights. Organizations need to evaluate privacy-preserving techniques that allow AI models to function effectively without compromising data security. Techniques like federated learning or differential privacy enable AI to learn from data patterns while protecting individual identities. Aligning AI design with the principle of privacy by design ensures that privacy requirements are integrated early in development, rather than as an afterthought. This balance not only supports regulatory compliance but also strengthens customer trust in how their data is handled within AI systems.

Vulnerability Management in AI Systems

AI systems introduce unique security vulnerabilities due to their complexity and reliance on large datasets, creating novel attack surfaces. Threats may include adversarial attacks that manipulate AI outputs, data poisoning, or unauthorized access to sensitive training data. Effective vulnerability management requires a proactive approach combining regular security assessments, penetration testing, and continuous monitoring tailored to AI environments. Patch management and timely updates are essential to defend against emerging threats targeting AI components. Additionally, collaboration between cybersecurity and AI development teams ensures that security considerations are woven into the entire AI lifecycle. Establishing incident response plans specific to AI-related breaches prepares organizations to act swiftly, minimizing impact on customer data and reinforcing overall resilience.

Impact on Business: ROI and Customer Trust

Measuring the Benefits of Robust Data Protection in AI

Investing in strong customer data protection within AI systems can lead to measurable business advantages. Robust protections reduce the likelihood of data breaches, which in turn minimizes potential financial losses from fines, remediation costs, and legal actions. Additionally, secure AI systems often enable smoother operations by preventing disruptions caused by security incidents. Beyond direct cost savings, companies gain a competitive edge by demonstrating a commitment to data privacy—a factor increasingly influencing customer decisions. Tracking improvements in customer retention rates, loyalty, and positive brand perception offers tangible metrics reflecting the returns on data protection investments. Businesses may also see efficiency gains from streamlined compliance processes and reduced overhead in managing data privacy risks. Collectively, these factors reinforce how protecting customer data in AI not only fulfills ethical and legal obligations but also contributes to sustainable growth and profitability.

Risks and Costs of Non-Compliance or Data Breaches

Failing to adequately safeguard customer data in AI systems can expose organizations to significant consequences. Regulatory bodies impose heavy fines for non-compliance: under GDPR, the most serious infringements can draw fines of up to €20 million or 4% of global annual turnover, whichever is higher, and CCPA provides for civil penalties assessed per violation. Data breaches can erode customer trust quickly, leading to churn and damage to brand reputation that may take years to recover. The financial impact includes direct costs such as incident response and forensic investigations, as well as indirect costs involving loss of business opportunities and increased scrutiny from regulators. In some cases, companies face lawsuits from affected customers or partners. Moreover, operational disruptions caused by cyberattacks can impact AI system functionality, affecting service delivery and decision-making processes. These risks underline the importance of proactive measures to mitigate vulnerabilities and maintain compliance, safeguarding both the business and its customer relationships.

Building Customer Confidence through Privacy-First AI Practices

Adopting a privacy-first approach to AI customer data protection is a powerful way to strengthen customer confidence. Transparent communication about how AI systems collect, use, and protect personal data reassures customers that their information is handled responsibly. Giving customers control over their data through clear consent processes and easy opt-out options enhances trust and fosters long-term loyalty. Companies that visibly prioritize privacy often differentiate themselves in crowded markets, appealing to privacy-conscious consumers and business partners. Demonstrating adherence to best practices and compliance standards publicly also helps establish credibility and authority. Encouraging a culture where privacy is embedded into all AI-related activities signals to customers that the organization values ethical data stewardship, turning privacy protection from a compliance necessity into a key dimension of the customer experience.

Implementing a Customer Data Protection Strategy for AI Systems

Steps to Assess Current Data Protection Readiness

Assessing your organization’s current data protection readiness is a foundational step toward securing customer data in AI systems. Begin by conducting a comprehensive data inventory, identifying what customer data is collected, stored, processed, and shared across AI platforms. Evaluate how this data flows within your systems and pinpoint any gaps or vulnerabilities. Next, review existing policies and controls surrounding data access, encryption, anonymization, and retention to determine their effectiveness in the context of AI-driven processes. It’s also important to assess the level of awareness and training your teams have regarding privacy regulations and AI-specific risks. Utilizing risk assessment frameworks tailored for AI environments can help quantify exposure and prioritize remediation efforts. Finally, benchmarking your practices against industry standards and regulatory requirements will highlight areas needing improvement. This thorough readiness evaluation provides a clear roadmap for strengthening your AI customer data protection strategy.

Developing Policies and Training for AI and Data Teams

Strong policies specifically addressing AI and customer data protection are essential for guiding teams in responsible data handling. Start by crafting clear, detailed guidelines that define acceptable data collection, usage, sharing, and storage practices tailored to AI applications. Policies should incorporate principles such as data minimization, purpose limitation, transparency, and consent management. Equally important is setting out procedures to detect and respond to data breaches and model biases. Beyond documentation, training programs tailored for AI developers, data scientists, and privacy officers equip them with the knowledge to implement these policies effectively. Regular workshops, scenario-based learning, and updates reflecting evolving regulations keep teams informed about new threats and compliance requirements. Embedding this continuous learning culture ensures your personnel can proactively identify risks and uphold ethical data practices in AI projects.

Integrating Data Protection into AI Development Lifecycles

Embedding data protection into every stage of the AI development lifecycle helps prevent privacy risks before they arise. This means integrating privacy by design principles from initial data collection through model training, testing, deployment, and ongoing monitoring. During data acquisition, focus on collecting only necessary information with explicit consent and ensure secure storage using encryption or anonymization techniques. Throughout model development, apply techniques that safeguard customer identities, such as differential privacy or federated learning where appropriate. Before deployment, conduct thorough privacy impact assessments and ensure mechanisms exist for data access controls and audit trails. Post-deployment, continuous monitoring for data anomalies or unauthorized access supports rapid incident response. Collaborating cross-functionally between AI engineers, security teams, and compliance experts is vital to align technical measures with legal and ethical standards. Integrating these practices helps build AI systems that respect customer privacy while delivering value.

Taking Action to Safeguard Customer Data in Your AI Systems

Practical Tips for Immediate Improvements

To quickly strengthen AI customer data protection, begin with straightforward yet effective steps. First, ensure data collected is strictly necessary for AI operations—eliminate any excess information that doesn’t serve a clear purpose. Next, apply encryption protocols both in transit and at rest to shield data from unauthorized access. Implement multi-factor authentication for all AI system access points to add a layer of security beyond passwords. Review and tighten access controls, granting permissions only on a need-to-know basis. Regularly update AI software and underlying infrastructure to patch vulnerabilities. Introduce logging and monitoring to detect anomalous behaviors early. Finally, educate your staff on data protection principles and operational procedures, so they understand their role in safeguarding customer information within AI workflows.

Resources and Tools to Support Your Data Protection Journey

Several specialized resources and tools can assist in protecting customer data within AI systems. Privacy-enhancing technologies (PETs) such as differential privacy and homomorphic encryption enable data analysis while limiting exposure of raw customer data. Tools for AI explainability help pinpoint how models use customer information, facilitating audits and compliance checks. Security platforms designed for AI environments offer capabilities like behavior analytics and real-time threat detection tailored to these systems. Additionally, regulatory compliance software can streamline adherence to laws such as GDPR and CCPA by automating data subject requests and consent management. To stay current, engage with industry groups or forums focused on AI ethics and privacy, which provide best practices and evolving standards for AI data protection.

Encouraging a Culture of Privacy and Security Awareness

Embedding a privacy-conscious mindset across an organization is essential to reinforce AI customer data protection over time. Leadership should champion transparency about data practices and clearly communicate privacy goals and policies. Regular training sessions tailored to different team roles ensure employees understand both the risks and their responsibilities related to AI data handling. Foster open communication channels for reporting potential issues without fear of retaliation. Recognize and reward proactive behaviors that enhance data protection. Incorporate privacy impact assessments and secure design principles into development workflows, making data protection an integral part of AI innovation rather than a separate afterthought. Cultivating this culture helps organizations adapt more readily to emerging threats and regulatory changes, ultimately building stronger trust with customers.

How Cobbai Addresses Key Challenges in AI Customer Data Protection

Protecting customer data while using AI in customer service demands a thoughtful balance of automation benefits and strict privacy practices. Cobbai’s platform is designed with this balance in mind, integrating data protection measures into every layer of its AI-native helpdesk. By unifying AI agents with a centralized knowledge system and secure communication channels, Cobbai minimizes data exposure risks through precise access controls and purpose-limited processing. For example, AI agents like Front and Companion assist with conversations and drafting responses without storing unnecessary personal data long-term, supporting the principle of data minimization.

Cobbai’s built-in governance tools empower support teams to specify and continuously monitor AI behavior, helping ensure compliance with internal policies and external regulations such as GDPR and CCPA. Transparency is also supported by clear audit trails and AI performance evaluation, allowing teams to track how customer data is used during automated interactions. This level of oversight is crucial for regular auditing and vulnerability management, two core practices for data security in AI-powered environments.

The integration of Cobbai Knowledge Hub consolidates support content while providing AI-ready indexing that anonymizes sensitive information during training or retrieval processes. This reduces risks associated with unauthorized data access or misuse. Additionally, Cobbai VOC and Topics provide insights from customer interactions while respecting privacy boundaries, enabling companies to identify trends without compromising individual data security.

By combining autonomous AI capabilities with configurable data governance and secure, centralized architecture, Cobbai helps customer service teams proactively address the distinct privacy challenges associated with AI. This approach delivers both operational efficiency and robust protections that foster trust with customers and regulators alike.